Surveillance Graphs

Vulgarity and Cloud Orthodoxy in Linked Data Infrastructures

(bibtex)

@article{doi/10.17613/syv8-cp10,

doi = {10.17613/syv8-cp10},

url = {https://hcommons.org/deposits/item/hc:54749/},

author = {Saunders, Jonny L.},

keywords = {AI Ethics, digital surveillance, information infrastructure, Knowledge Graphs, Large Language Models, Linked Data, Semantic Web},

title = {Surveillance Graphs: Vulgarity and Cloud Orthodoxy in Linked Data Infrastructures},

publisher = {hcommons},

year = {2023},

copyright = {Creative Commons Attribution Share Alike 4.0 International}

} Information is power, and that power has been largely enclosed by a handful of information conglomerates. The logic of the surveillance-driven information economy demands systems for handling mass quantities of heterogeneous data, increasingly in the form of knowledge graphs. An archaeology of knowledge graphs and their mutation from the liberatory aspirations of the semantic web gives us an underexplored lens to understand contemporary information systems. I explore how the ideology of cloud systems steers two projects from the NIH and NSF intended to build information infrastructures for the public good to inevitable corporate capture, facilitating the development of a new kind of multilayered public/private surveillance system in the process. I argue that understanding technologies like large language models as interfaces to knowledge graphs is critical to understand their role in a larger project of informational enclosure and concentration of power. I draw from multiple histories of liberatory information technologies to develop Vulgar Linked Data as an alternative to the Cloud Orthodoxy, resisting the colonial urge for universality in favor of vernacular expression in peer to peer systems.

- Introduction

- Knowledge Graphs: A Backbone in the Surveillance Economy

- Public Graphs, Private Profits

- Infrastructural Ideologies

- References

With Gratitude To…

- Ed Summers - @edsu@social.coop - for providing context on Linked Data history, among other topics.

- Fabián Heredia - @fabianhjr@sunbeam.city - for recommending a number of resources on FOSS exploitation.

Note:

This piece is an extension of “Linked Data or Surveillance Capitalism” in Decentralized Infrastructure for (Neuro)science [1] and reproduces text from it in whole and in part.

Feedback, contribution, and criticism are welcome!

Introduction

The world is Big Data, and The Cloud is its landlord. It is our responsibility to ferret it out of its primitive unknown, mine it, harvest it, dump it by the tanker-truckful into great Data Lakes overhung by computational Clouds to refine the Actionable Insights from its desiccated husk. The Cloud promises us an infinite, seamless expanse of Knowledge. If only we can harness the wily spray of our Organic Content, filtering our every action, affection, and affiliation through a thicket of algorithmically optimized platforms then The Cloud might teach us enough about ourselves to finally be happy. Information by its many names is the central quilting point for contemporary capitalism (eg. see [2]), and like prior assemblages of capital is thick with contradiction. It is historically contingent and inevitable, material and transcendent, a concrete set of technologies and techniques as well as a web of belief systems, power, and dreams. The Cloud now dreams of a great Knowledge Graph of Everything, to Dissolve the Silos that keep the Bigness of Data from teaching us all we could know. It tells us this is important for the fate of humanity.

The Knowledge Graph of Everything and all that it promises is a mirage, though. Its history is that of “primitive accumulation” of informational capital, the widening of informational asymmetries, and the logical conclusion of a model of digital serfdom where we are promised glimpses of unimaginable computational power through the pinhole lens of platforms for rent. With the enclosure of the web nearly total, and our ability to imagine it in any other form eclipsed, information conglomerates now position themselves as information “Infrastructures” rather than mere Platforms [3, 4]. The politics, property and power relationships of the contemporary web recede into the background of always-on elastic computation. Public resources are rallied to build seemingly public data infrastructures to feed far-flung facets of public life to systems built for decidedly private profit. Aside from the pathological nature of the Knowledge Graph of Everything as a colonial vision of all data being put in its one True order, it is impossible and won’t work. Instead, by uncritically adopting the logic of The Cloud, governments and academics will be led along by the nose just long enough to build critical mass for an interlocking set of platforms that ratchet us ever further into the captivity of surveillance.

Approaching the information-surveillance-platform archipelago through knowledge graphs gives us an underexplored lens with which to understand the politics of contemporary data infrastructures. Their history, the development from the liberatory ambitions of the Semantic Web and Linked Data into the panoptical data systems of the surveillance economy, is rich with ‘paths not taken’ from which we can reimagine a future. Two contemporary projects from the National Institutes of Health (NIH) and National Science Foundation (NSF) illustrate the ways our ambitions for public data infrastructures are steered by the constraints of the cloud and the imminent capacity for harm that poses. Rather than some obscure squabble between academics, public knowledge graph projects intersect squarely with the ideological foundation of The Cloud along with the parallel strains of “AI” to show how Large Language Models (LLMs) are the tools for the next great extension of surveillance capitalism and re-entrenchment of informational dominance.

The past, present, and future of knowledge graphs give us the pieces to articulate a properly human data infrastructure as vulgar linked data. Predicated on relationality, heterogeneity, distribution of power, and vernacular expression, vulgar linked data infrastructures attempt to empower people to socially organize information in a truly decentralized sociotechnological commons, rather than empowering systems to rent knowledge organization for profit.

Knowledge Graphs: A Backbone in the Surveillance Economy

Knowledge graphs as a technology are relatively straightforward to define [5, 6, 7, 8] (though see [9]): directed, labeled graphs consisting of nodes corresponding to entities like a person, dataset, location, etc. and edges that describe their relationship1. Knowledge graphs typically make use of some controlled ontology that provides a specific set of terms for nodes and edges and how they are to be used, and “types” that give a given entity an expected set of properties represented by edges. This makes for an extremely general data structure, where heterogeneous data can form a continuous graph in a way that is both structured and can accommodate ad-hoc modification not anticipated by a schema. For example, in Wikidata, Peter Kropotkin (Q5752) is an instance of the “human” type, which has properties like sex or gender (male) and place of birth (Moscow), but also has additional properties not in the human type like signature. Each of the “edges” like place of birth link to other nodes like Moscow, which in turn have their own sets of links, and so on.

Knowledge graphs are in themselves a fairly ordinary class of data structures and technologies, but their history is the story of the enclosure of the wild and open web into a series of surveillance-backed platforms.

Semantic Web: Priesthoods

The term “Knowledge Graph” evolved out of the Semantic Web project [6], and so we rewind to the start point of our history at the end of the 90’s. It is difficult to reconstruct how radical the notion of a collection of documents organized by arbitrary links between them was at dawn of the internet. At the time, the infrastructures of linking documents looked more like ISBNs, carefully regulated by expert, centralized authorities2. Being able to just link to anything was terrifying and new (eg. [10, 11]).

The initial design of the web imagined it as a self-organizing process, where people would maintain their own websites and organize a collection of links to other websites3. It became clear relatively quickly that the anarchy of a socially self-organizing internet wasn’t going to work as planned, where without a formal system of organization “people were frightened of getting lost in it. You could follow links forever.” [12]

Like the radical nature of linking on the web, it’s difficult to remember that the web as surveillance apparatus thinly veiled as the five or so remaining platform-websites was not inevitable. The pre-dotcom bust internet of the 90’s and early 2000’s was far from the commercialized wasteland we know today. Ed Horowitz, CEO of Viacom explained in 1996: “The Internet has yet to fulfill its promise of commercial success. Why? Because there is no business model” [13]. Google’s AdWords being a defining moment in the development of surveillance capitalism is a story already told [14]: taking advantage of the need for search generated by the disorganization of the web, AdWords turned personal search data into a profit vector by selling targeted space in the results.

The significance of the relationship between search, the semantic web, and what became knowledge graphs is less widely appreciated. The semantic web was initially an alternative to monolithic search engine platforms - or, more generally, to platforms in general [15]. It imagined the use of triplet links and shared ontologies at a protocol level as a way of organizing the information on the web into a richly explorable space: rather than needing to rely on a search bar, one could traverse a structured graph of information [16, 17] to find what one needed without mediation by a third party.

The Semantic Web project was an attempt to supplement the arbitrary power to express human-readable information in linked documents with computer-readable information. It imagined a linked and overlapping set of schemas ranging from locally expressive vocabularies used among small groups of friends through globally shared, logically consistent ontologies. The semantic web was intended to evolve fluidly, like language, with cultures of meaning meshing and separating at multiple scales [18, 19, 20]:

Locally defined languages are easy to create, needing local consensus about meaning: only a limited number of people have to share a mental pattern of relationships which define the meaning. However, global languages are so much more effective at communication, reaching the parts that local languages cannot. […]

So the idea is that in any one message, some of the terms will be from a global ontology, some from subdomains. The amount of data which can be reused by another agent will depend on how many communities they have in common, how many ontologies they share.

In other words, one global ontology is not a solution to the problem, and a local subdomain is not a solution either. But if each agent has uses a mix of a few ontologies of different scale, that is forms a global solution to the problem. [18]

The Semantic Web, in naming every concept simply by a URI, lets anyone express new concepts that they invent with minimal effort. Its unifying logical language will enable these concepts to be progressively linked into a universal Web. [19]

This free form goal of expression for expression’s sake was always in tension with another part of the vision - serving as a backbone for AI “agents” that could compute emergent function from the semantic web. Succinctly: “Human language thrives when using the same term to mean somewhat different things, but automation does not.” [19] This tension persists through the broader history of the web, and we will return to it soon.

Linked Data: Platforms

Much of the work of the semantic web project in the early 2000s focused on the “global” side of this tension at the expense of the “local” - creating ontologies and related technologies intended to serve as a foundation for expressing basic things in a common vocabulary [6]. This work had many successes, but began a schism between the priesthood of people concerned with making systems that were correct and those that were more concerned with making things that worked - or supported “local” expression (eg [21]). Aaron Swartz captured this frustration in his unfinished book:

Instead of the “let’s just build something that works” attitude that made the Web (and the Internet) such a roaring success, they brought the formalizing mindset of mathematicians and the institutional structures of academics and defense contractors. They formed committees to form working groups to write drafts of ontologies that carefully listed (in 100-page Word documents) all possible things in the universe and the various properties they could have, and they spent hours in Talmudic debates over whether a washing machine was a kitchen appliance or a household cleaning device. [22]

Lindsay Poirier describes this difference in “thought styles” as a rift between the “neats” focused on universalizing a priori ontologies and the “scruffies” focused on everyday use and letting the structure appear afterwards [23]. The latter characterizes the “second age” of the Semantic Web after 2006 - the reorganization around Linked Data [16, 6]. The era of Linked Data de-emphasized the idealistic and ideological goals of the early Semantic Web, driven more by an empirical approach of trying to realize these systems on the wilds of the web, creating some of the first public “Linked Open Data” systems like DBPedia and Freebase.

This turn coincides with the emerging platformatization and enclosure of the web as “Web 2.0.” Throughout the early 2000s, the work of the Semantic Web project was largely invisible to the ordinary web user, and its vision of a self-organizing web was easily outcompeted by the now-ubiquitous use of search engines to index the web. Where in the early 2000s web architects were imagining the future of web continuing to take place on free and open protocols, the Linked Data/Web 2.0 era corralled us into a pattern of platforms which quickly ratcheted their way to dominance in a positive feedback loop of user experience design, network effects, and profit. On platforms, rather than a system that “belongs” to everyone, you are granted access to some specific set of operations through an interface so that you can be part of a social process of producing and curating information for the platform holder. Shifting focus from the idealistic vision of public, protocol-driven self-organization to platforms for declaring and consuming semantic web data resulted in a lot of functional tools, but also ripened the project for capture.

Knowledge Graphs: Panoptica

In 2010 Google acquired Metaweb and its publicly-edited Semantic Web database Freebase, and in 2012 repackaged it and the ideas of Linked Data as what it called a Knowledge Graph — the third era of the Semantic Web [24, 25]. Freebase only made up part of it, and the full extent of Google’s Knowledge Graph is unknown, but its most visible impact are the factboxes that present structured information about the subjects of searches - like biographical information in a search for a person, or the different widgets for contextual interaction like restaurant reservations4 [26]. Knowledge Graphs still share the same underlying structure — triplet graphs with ontologies — even if they occupy a broader space of implementations and technologies. What differs is the context and intended use: the “worldview” of the knowledge graph.

Beyond the obvious product-level features it supports, Google’s acquisition of Freebase and the structure of its Knowledge Graph represent at least two deeper shifts in the trajectory of the Semantic Web and the broader internet: the privatization of technologies with initially liberatory aspirations, and an early template of the all too familiar sprawling, surveillance-driven information conglomerate.

The form of of the semantic web that emerged as “Knowledge Graphs” flipped the vision of a free and evolving internet on its head. The mutation from “Linked Open Data” [16] to “Knowledge Graphs” is a shift in meaning from a public and densely linked web of information from many sources to a proprietary information store used to power derivative platforms and services. The shift isn’t quite so simple as a “closure” of a formerly open resource — we’ll return to the complex role of openness in a moment. It is closer to an enclosure, a domestication of the dream of the Semantic Web. A dream of a mutating, pluralistic space of communication, where we were able to own and change and create the information that structures our digital lives was reduced to a ring of platforms that give us precisely as much agency as is needed to keep us content in our captivity. Links that had all the expressive power of utterances, questions, hints, slander, and lies were reduced to mere facts. We were recast from our role as people creating a digital world to consumers of subscriptions and services. The artifacts that we create for and with and between each other as the substance of our lives online were yoked to the acquisitive gaze of the knowledge graph as content to be mined. We vulgar commoners, we data subjects, are not allowed to touch the graph — even if it is built from our disembodied bits.

The same technologies, with minor variation, that were intended to keep the internet free became emblematic of and coproductive with the surveillance/platform model that has enclosed it. Beyond Google, knowledge graphs are an elemental part of the information economy. Banks, militaries, governments, life science corporations, journalists, everyone is using knowledge graphs [27, 28]. Their ubiquity is not an accident, one of many possible data systems that could have fit the bill, but reflects and reinforces basic patterns of the information economy and the corporations within it. Conveniently, semantic web technologies, designed to accommodate the infinitely heterogeneous, multiscale nature of free and unmediated social structuring of information are also quite useful for the indefinitely expanding dragnet of data collection that defines the operation of contemporary capitalism.

Data companies — most major companies5 — need to store and maintain massive collections of heterogeneous data across their byzantine hierarchies of executives, managers, and workers. This gigantic haunted ball of data is not just a tool, but the substance of the company. A data company persists by exploiting the combinatorics of its data hoard, spinning off new platforms that in turn maintain and expand access to data by creating captive data subjects6. As it expands, a conglomerate will acquire many new sources and modalities of data and need to integrate them with its existing data.

Knowledge graphs are particularly well suited for this “data integration” problem. A full technical description is out of scope here, but briefly: traditional relational database systems can be very difficult to modify and refactor, and that difficulty increases the larger and more complex a database is7. One has to design the structure of the anticipated data in advance, and the abstract schematic structure of the data is embedded in how it is stored and accessed. It is particularly difficult to do unanticipated “long range” analyses where very different kinds of data are analyzed together.

In contrast, merging graphs is more straightforward8 [5, 28, 29, 30, 31, 32, 33, 34] - the data is just triplets, so in an idealized case9 it is possible to just concatenate them and remove duplicates (eg. for a short example, see [35, 36]). The graph can be operated on locally, with more global coordination provided by ontologies and schemas, which themselves have a graph structure [37]. Discrepancies between graphlike schema can be resolved by, you guessed it, making more graph to describe the links and transformations between them. Long-range operations between data are part of the basic structure of a graph - just traverse nodes and edges until you get to where you need to go - and the semantic structure of the graph provides additional constraints to that traversal. Again, a technical description is out of scope here, graphs are not magic, but they are well-suited to merging, modifying, and analyzing large quantities of heterogeneous data10.

So if you are a data broker, and you just made a hostile acquisition of another data broker who has additional surveillance information to fill the profiles of the people in your existing dataset, you can just stitch those new properties on like a fifth arm on your nightmarish data Frankenstein.

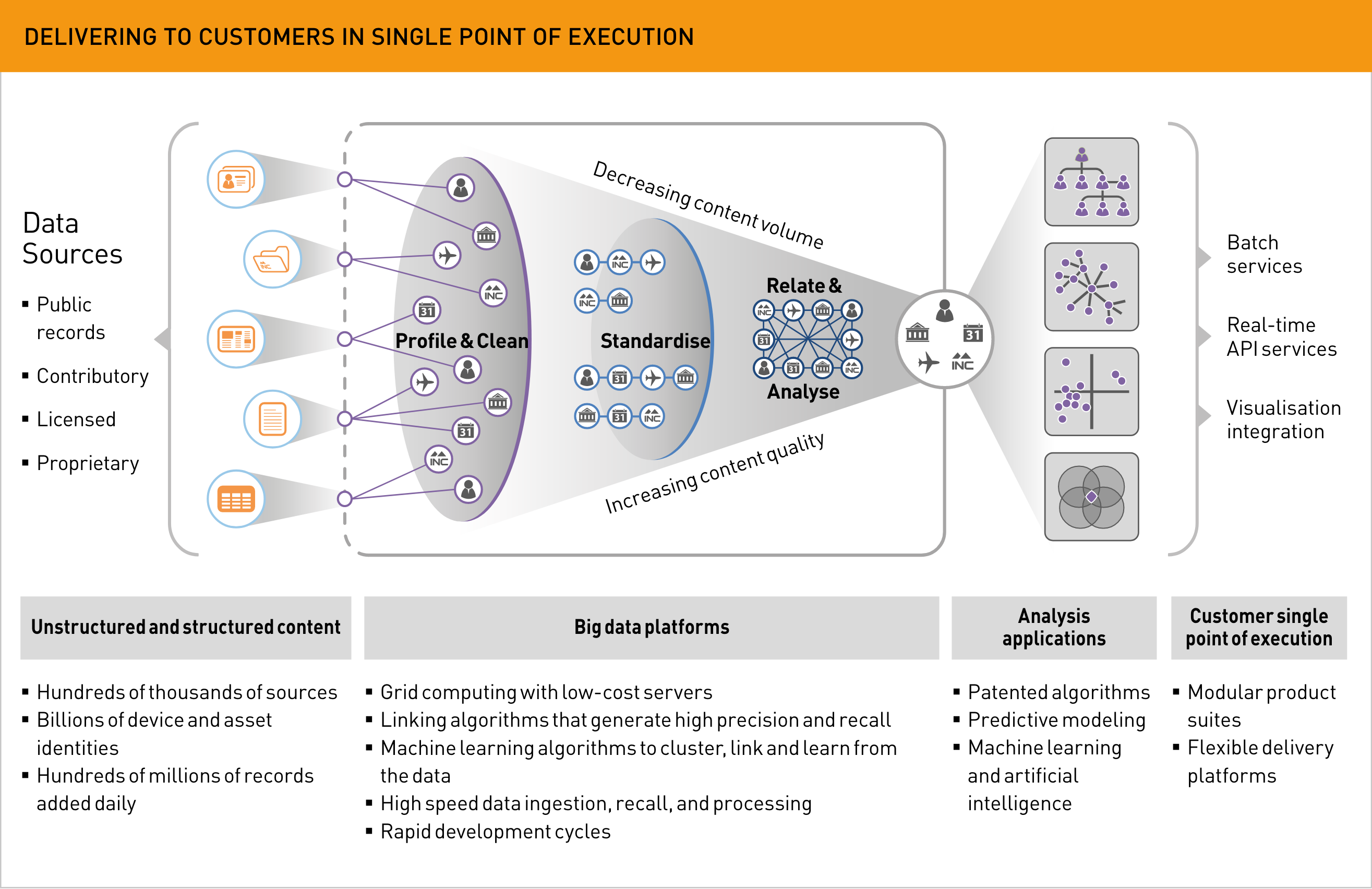

What does this look like in practice? While in a bygone era Elsevier was merely a rentier holding publicly funded research hostage for profit, its parent company RELX is paradigmatic of the transformation of a more traditional information rentier into a sprawling, multimodal surveillance conglomerate (see [38]). RELX proudly describes itself as a gigantic haunted graph of data:

Technology at RELX involves creating actionable insights from big data – large volumes of data in different formats being ingested at high speeds. We take this high-quality data from thousands of sources in varying formats – both structured and unstructured. We then extract the data points from the content, link the data points and enrich them to make it analysable. Finally, we apply advanced statistics and algorithms, such as machine learning and natural language processing, to provide professional customers with the actionable insights they need to do their jobs.

We are continually building new products and data and technology platforms, re-using approaches and technologies across the company to create platforms that are reliable, scalable and secure. Even though we serve different segments with different content sets, the nature of the problems solved and the way we apply technology has commonalities across the company. [39]

In its 2022 Annual Report, RELX describes its business model as ingesting large quantities of data, linking them together, and deriving platforms from them. [39]

In its 2022 Annual Report, RELX describes its business model as ingesting large quantities of data, linking them together, and deriving platforms from them. [39]

While to any individual market segment or class of customers RELX and its subsidiaries might look like a portfolio of separate platforms and applications, one can only make sense of the company by thinking of each of them as a view on an interconnected graph of data11. Each additional source of data, either by acquiring new companies or by expanding their existing control of informational access points has the potential to create some combinatorically new set of opportunities for new platforms.

For example, RELX is able to gather surveillance data on researcher attention data through the tracking in its ScienceDirect and Mendeley platforms. It also collects a large amount of chemical data through its control of scientific publishing that it rents access to on its Reaxys platform, which is supplemented by its LexisNexis (another RELX subsidiary) PatentSight database of patents. So far so normal.

What about the other sides of the multisided market? RELX is able to combine these and other data sources into new product. For pharmaceutical R&D companies, their bespoke Drug Design Optimization services advertise being able to use chemical, disease, and literature-based data to generate a priority list of potential therapeutic targets and drugs, as well as provide “competitive intelligence” about which targets are currently being studied, presumably identified from their ownership of the scientific literature coupled with surveillance data. Since clinicians don’t trust pharmaceutical advertisements [40], Elsevier uses its position as a perceived neutral third party to repackage advertisements as informational systems [41], “journal-branded webinars,” as well as a number of other avenues via its “360 degree advertising solutions” catalogue. So, by combining several data sources and platforms, Elsevier is able to offer pharmaceutical companies recommendations for candidate drugs above and beyond what would be possible with chemical information alone and then advertise their drugs directly to doctors.

Derivative platforms beget derivative platforms, as each expands the surface of dependence and provides new opportunities for data to capture. Its integration into clinical systems by way of reference material is growing to include electronic health record (EHR) systems, and they are “developing clinical decision support applications […] leveraging [their] proprietary health graph” [39]. Similarly, their integration into Apple’s watchOS to track medications indicates their interest in directly tracking personal medical data.

That’s all within biomedical sciences, but RELX’s risk division also provides “comprehensive data, analytics, and decision tools for […] life insurance carriers” [39], so while we will never have the kind of external visibility into its infrastructure to say for certain, it’s not difficult to imagine combining its diverse biomedical knowledge graph with personal medical information in order to sell risk-assessment services to health and life insurance companies. LexisNexis has personal data enough to serve as an “integral part” of the United States Immigration and Customs Enforcement’s (ICE) arrest and deportation program [42, 43], including dragnet location data [44], driving behavior data from internet-connected cars [45], and payment and credit data as just a small sample from its large catalogue [46] of data aggregated and linked into comprehensive profiles [47]. The contemporary knowledge graph-powered surveillance conglomerate gains its versatility precisely from its ability to span many unrelated domains and deploy new platforms as opportunities present themselves. As new data sources are acquired, the combinatorics of possible surveillance products correspondingly explode.

This pattern is true across the information industry [30]. A handful of representatives from Microsoft, Google, Facebook, eBay, and IBM describe some elements of each of their knowledge graphs in a 2019 paper [26]. Each has different scopes, applications, and interaction with the other data and processing infrastructure at the company, but all emphasize the ability for their knowledge graphs to accommodate change, heterogeneity, conflicting data, inference, and facilitate work by distributed teams due to their self-documenting and modular nature. Neo4j, developers of an eponymous graph database library, describes in one case study among its hundreds of customers how the U.S. Army uses its “connected data” to track its equipment and estimate the cost of some new exploratory imperialism [48]. An analysis of Palantir’s hundreds of patents for knowledge graph technology (eg. [49, 50, 51, 52]) describes its ambitions for its knowledge graph:

There is evidence […] that Palantir has infrastructural aspirations to become a general classification system for data integration […] that can be tailored into a universal knowledge graph. […] Palantir similarly imagines a world where its platform might serve as a “shadow” universal knowledge graph for governments, industries, and organizations. [53]

Knowledge graphs as a technology - like all technologies - are not intrinsically unethical. It is the structure of the capital-K capital-G Knowledge Graph in its particular construction as a set of property and power relationships set against the context of the platform web that is pathological. They represent the historical trajectory of semantic web ideas and technologies from something that we are intended to use and create directly into privately held data that we can only interact with through platforms. They are coproductive with the corporate and technical structure of surveillance capitalism, facilitating conglomerates that gobble up as many platforms and data sources as possible to stitch them into an expanding, heterogeneous graph of data.

In particular, it is their “graph plus compute” structure - where some underlying graph of data is coupled with a set of algorithms and interfaces to view it - that is necessary to understand some of the more counterintuitive motivations of surveillance conglomerates. This structure complicates questions of “openness” versus “proprietariness,” and provides a different lens on ostensibly “open” or “public” knowledge graph-based infrastructure projects.

Public Graphs, Private Profits

Unqualified Openness Considered Harmful

If the problem is information conglomerates stockpiling a massive quantity of proprietary data and renting use of it, isn’t “open data” the answer? “Openness,” including open source, open standards, and open data, is a subtle tool that can be used both to dissolve and reinforce economic and political power and is particularly ill-suited as a counter-strategy for corporate knowledge graphs .

Free and open source software, with its noble (and decidedly non-monolithic [54]) goal of creating an ecosystem of free12 software, is a means by which large information companies can harvest the commons and outsource labor costs [55, 56, 57, 58, 59]. There are countless examples of FOSS developers maintaining software widely used by companies making billions of dollars for little or no compensation - eg. core-js [60], OpenSSL [61], leftpad [62], PLC4X [63] and so on. When an information company releases or supports an open source project it is rarely an act of altruism. The effect is to prevent another company from profiting from a proprietary version of that technology, signal virtue, drive recruitment, and create a centralized point to concentrate donated labor. Microsoft, a famously good actor in software, took this several steps further with GitHub, VSCode, and later Copilot, capturing a large chunk of the software development process in order to trick programmers to be the “humans in the loop” refining the neural network to write code and dilute their labor power [64, 65, 66, 67].

“Peer production” models, a more generic term for public collaboration that includes FOSS, has similar discontents. The related term “crowdsource13” quite literally describes a patronizing means of harvesting free labor via some typically gamified platform. Wikipedia is perhaps the most well-known example of peer production14, and it too struggles with its position as a resource to be harvested by information conglomerates. In 2015, the increasing prevalence of Google’s information boxes caused a substantial decline in Wikipedia page views [68, 69] as its information was harvested into Google’s knowledge graph, and a “will she, won’t she” search engine arguably intended to avoid dependence on Google was at the heart of its 2014-2016 leadership crisis [70, 71]. While shuttering Freebase, Google donated a substantial amount of money to kick-start its successor [72] Wikidata, presumably as a means of crowdsourcing the curation of its knowledge graph [73, 74, 75].

“Open” standards are yet another fraught domain of openness. For an example within academia, the seemingly-open Digital Object Identifier (DOI) system was concocted as a means for publishers to retain control of indexing research, avoiding the impact of the proposed free repository PubMedCentral and the high overhead of linking documents between publishers15 (see sec. 3.1.1 in [1]). The nonprofit standards body NISO’s standards for indicating journal article versions [76] and licensing [77] are used by publishers to enforce their intellectual property monopolies and programmatically scour the web to prevent free access to publicly funded information [78].

Schema.org, a standard intended to be the generic interchange ontology of the web, is another emblem of enclosure of the semantic web. Its introduction at the SemTech 2011 conference was cause for a rare point of agreement16 between the then-warring maintainers of RDFa and Microformats: “folks, it’s wrong for Google to dictate vocabularies, let’s not lose sight of that” [79]. Though ostensibly open, its structure and emphases have been roundly criticized, eg. having a eurocentric bias towards commercially valuable information [80]. It encourages website maintainers to embed Schema.org annotations in their pages in exchange for a boost in search rankings — which Google then embeds in its infoboxes, driving down page views. More fundamentally it cements the notion that Linked Data is something that we are only intended to use to make our information more available to some search engine crawler rather than make use of for ourselves: “In general, the design decisions place more of the burden on consumers of the markup” [81]. It encodes the notion that there should be one “neutral” means of representing information for one (or a few) global search engines to understand, rather than for local negotiation over meaning. According to the transcribed Q&A after its 2011 announcement, the Google representatives characterized the creation of authoring tools like those created to make creative use of HTML more accessible as a potential “alternative path,” but then dismissed the notion of improved tooling as “impossible” [82].

Clearly, on its own, mere “openness” is no guarantee of virtue, and socio-technological systems must always be evaluated in their broader context: what is open? why? who benefits? Open source, open standards, and peer production models do not inherently challenge the rent-seeking behavior of information conglomerates, but can instead facilitate it.

In particular, the maintainers of corporate knowledge graphs want to reduce labor duplication by making use of some public knowledge graph that they can then “add value” to with shades of proprietary and personal data (emphasis mine):

In a case like IBM clients, who build their own custom knowledge graphs, the clients are not expected to tell the graph about basic knowledge. For example, a cancer researcher is not going to teach the knowledge graph that skin is a form of tissue, or that St. Jude is a hospital in Memphis, Tennessee. This is known as “general knowledge,” captured in a general knowledge graph. The next level of information is knowledge that is well known to anybody in the domain—for example, carcinoma is a form of cancer or NHL more often stands for non-Hodgkin lymphoma than National Hockey League in some contexts it may still mean that—say, in the patient record of an NHL player). The client should need to input only the private and confidential knowledge or any knowledge that the system does not yet know. [26]

The creation of a collection of more domain-specific ontologies and tooling for ingesting previously unstructured data would allow for a new kind of globally linked knowledge graph ecosystem — making use of a broader range of publicly-available data, as well as facilitating new markets for renting access to interoperable data. Five information conglomerates conclude their joint paper on knowledge graphs accordingly:

The natural question from our discussion in this article is whether different knowledge graphs can someday share certain core elements, such as descriptions of people, places, and similar entities. [26]

Having such standards be under the stewardship of ostensibly neutral and open third-parties provides cover for powerful actors exerting their influence and helps overcome the initial energy barrier to realizing network effects from their broad use [83, 84]. Peter Mika, the director of Semantic Search at Yahoo Labs, describes this need for third-party intervention in domain-specific standards:

A natural next step for Knowledge Graphs is to extend beyond the boundaries of organisations, connecting data assets of companies along business value chains. This process is still at an early stage, and there is a need for trade associations or industry-specific standards organisations to step in, especially when it comes to developing shared entity identifier schemes. [85]

As with search, we should be particularly wary of information infrastructures that are technically open17 but embed design logics that preserve the hegemony of the organizations that have the resources to make use of them. The existing organization of industrial knowledge graphs as chimeric “data + compute” models give a hint at what we might look for in public knowledge graphs: the data is open, but to make use of it we have to rely on some proprietary algorithm or cloud infrastructure.

Unfortunately, that is exactly what at least two US Federal agencies have in mind: the NIH and NSF are both in the thick of engineering cloud-based knowledge graph infrastructures and domain-specific ontologies with all the trappings of technology that fills the stated needs of information conglomerates at the expense of the people it is outwardly intended to serve. I assume that the researchers and engineers working on these projects are doing so with the best of intentions. The object of criticism is not the individuals within these projects, but the ideologies and systems they are embedded within. I will describe those efforts and their already apparent harms as a way of understanding how these technologies illustrate and reinforce the dominance of the existing corporate informational ecosystem — and to articulate an alternative.

NIH: The Biomedical Translator

Note:

This section is reproduced from, focuses, and expands on “Linked Data or Surveillance Capitalism?” from [1].

The NIH’s Biomedical Data Translator18 project was initially described in its 2016 Strategic Plan for Data Science as a means of translating between biomedical data formats:

Through its Biomedical Data Translator program, the National Center for Advancing Translational Sciences (NCATS) is supporting research to develop ways to connect conventionally separated data types to one another to make them more useful for researchers and the public. [86]

The original funding statement from 2016 is similarly humble, and press releases through 2017 also speak mostly in terms of querying the data – though some ambition begins to creep in. By 2019, the vision for the project had shifted from translating between data types into the realm of heterogeneous linkages in some meta-level system for linking and reasoning over them.

In their piece “Toward a Universal Biomedical Translator,” then in a feasibility assessment phase, the members of the Translator Consortium assert that universal translation between biomedical data is impossible19[87]. The impossibility they saw was not that of conflicting political demands on the structure of organization (as per [88]), but of the sheer quantity of the data and vocabularies needed to describe them. The risk posed by a lack of a universal “language” was not being able to index all possible data, rather than inaccuracy or inequity20.

Undaunted by their stated belief in the impossibility of a universalizing ontology, the Consortium created one in their biolink model21 [89, 90]. Biolink consists of a hierarchy of general22 classes: eg. a BiologicalEntity like a Gene, or a ChemicalEntity like a Drug. Classes can then linked by any number of properties, or “Slots23.”

Biolink was designed to be a sort of “meta ontology,” or a means of mapping different domain-specific biomedical ontologies onto a common vocabulary24. As a meta-ontology, Biolink is targeted towards “meta-data.” Rather than accommodating “raw data25,” Biolink is expected to operate at the level of “knowledge,” or “generally accepted, universal assertions derived from the accumulation of information” [91]: this procedure treats that disease, this chemical interacts with that one, etc.

The primary way Biolink is used within the Translator is to structure a registry of database APIs, each called a “Knowledge Source.” Knowledge Sources use Biolink to declare that they are able to provide assertions about a particular set of classes or slots, like drugs that affect genetic expression, which makes them part of the Translator’s distributed Knowledge Graph. The Translator project, in this universalizing impulse, recapitulates some of the early beliefs of the Semantic Web updated with some of the techniques of Linked Data.

This structure strongly constrains who is intended to be able to contribute to the Translator: highly curated biomedical informatics platforms, rather than basic researchers or the public at large. NIH RePORTER shows a series of grants for small councils of experts to create domain-specific ontologies and Knowledge Sources. This, in turn, reflects deeper beliefs about the nature of information within the Translator ecosystem: “knowledge” is not a social, contextual, or dialogical phenomenon, but a “natural resource” that can be mined from information that is “out there.” A scientific paper is a neutral carrier of a factual link between entities. The meaning of “translation,” in some uses, has shifted from translating between data formats, to “translating information into knowledge” [87]. This is, of course, the ideology of Big Data: “when heterogeneous networks are connected at a massive scale, new knowledge can be extracted as an emergent property of the network” [92]. The Translator seems to imagine its project as a refinery, converting crude data into Knowledge that can fuel platforms.

The platforms that the translator imagines are those where clinicians or researchers can pose plain language queries and have answers returned by some algorithmic “reasoning agent” that aggregates data from multiple Knowledge Providers and synthesizes a response [90, 93, 94, 95, 96]. We are not intended to look too closely at the data from Knowledge Providers, as it is likely to be incomplete or conflicting.

Several pilot experiments have demonstrated combining some aggregated patient records with the broader knowledge graph in order to eg. identify new risk markers for disease [92, 97, 98, 99]. These systems layer personal records underneath “general” biomedical information like drug interactions and biological processes and use the extended information from the graph to infer information both about the nature of the disease and the patient. A platform integrated with the UCSF electronic health record system that layers disaggregated clinical records under the general knowledge graph is already apparently in a state of mature development [100].

It is only with the inclusion of patient records into the knowledge graph that it becomes possible to use in a clinical setting: for even basic queries like “which drugs treat this disease” one has to be aware of patient qualities like allergies and comorbid conditions. To know how to treat the generic diagnosis of “gender dysphoria,” one needs to know which gender the patient is experiencing dysphoria about. The logic of knowledge graph makes it not just hungry for some personal medical data, the promise is that more data always improves its results26.

Why might we be critical about the NIH funding a series of projects to unify biomedical and personal health data in some universalized, platformatized knowledge graph? In short: because it won’t work as intended, its partially-working components will have immediately harmful results, and it will inevitably be captured by the surveillance industry.

First, as with any machine-learning based system, the algorithm can only reflect the implicit structure of its creation, including the beliefs and values of its architects [101, 102], its training data and accompanying bias [103], and so on. The “mass of data” approach ML tools lend themselves to, in this case, querying hundreds of independently operated databases, makes dissecting the provenance of every entry from every data provider effectively impossible. For example, one of the providers, mydisease.info was more than happy to respond to a query for the outmoded definition of “transsexualism” as a disease [104] along with a list of genes and variants that supposedly “cause” it - see for yourself. At the time of the search, tracing the source of that entry first led to the disease ontology DOID:1234, which has an official IRI, but in this case was being served by a graph aggregator Ontobee (Archive Link), which in turn listed this unofficial GitHub repository maintained by a single person as its source27. This is, presumably, the fragility and inconsistency in input data that the machine learning layer is intended to putty over.

If the graph encodes being transgender as a disease, it is not farfetched to imagine the ranking system attempting to “cure” it. A seemingly pre-release version of the translator’s query engine, ARAX, does just that: in a query for entities with a biolink:treats link to gender dysphoria28, it ranks the standard therapeutics [105, 106] Testosterone and Estradiol 6th and 10th of 11, respectively — behind a recommendation for Lithium (4th) and Pimozide (5th) due to an automated text scrape of two conversion therapy papers29. Queries to ARAX for treatments for gender identity disorder helpfully yielded “zinc” and “water,” offering a paper from the translator group that describes automated drug recommendation as the only provenance [107]. A query for treatments for DOID:1233 “transvestism” was predictably troubling, again prescribing conversion therapy from automated scrapes of outdated and harmful research. The ROBOKOP [108] query engine behaved similarly, answering a query for genes associated with gender dysphoria with exclusively trivial or incorrect responses30.

It is critically important to understand that with an algorithmic, graph-based precision medicine system like this harm can occur even without intended malice. The power of the graph model for precision medicine is precisely its ability to make use of the extended structure of the graph31. The “value added” by the personalized biomedical graph is being able to incorporate the patient’s personal information like genetics, environment, and comorbidities into diagnosis and treatment. So, harmful information embedded within a graph — like transness being a disease in search of a cure — means the system either a) incorporates that harm into its outputs for seemingly unrelated queries or b) doesn’t work. This simultaneously explodes and obscures the risk surface for medically marginalized people: the violence historically encoded in mainstream medical practices and ontologies (eg. [104, 109], among many), incorrectly encoded information like that from automated text mining, explicitly adversarial information injected into the graph through some crowdsourcing portal like this one [110], and so on all presented as an ostensibly “neutral” informatics platform. Each of these sources of harm could influence both medical care and biomedical research in ways that even a well-meaning clinician might not be able to recognize.

The risk of harm is again multiplied by the potential for harmful outputs of a biomedical knowledge graph system to trickle through medical practice and re-enter as training data. The Consortium also describes the potential for ranking algorithms to be continuously updated based on usage or results in research or clinical practice32 [87]. Existing harm in medical practice, amplified by any induced by the Translator system, could then be re-encoded as implicit medical consensus in an opaque recommendation algorithm. There is, of course, no unique “loss function” to evaluate health. One belief system’s vision of health is demonic pathology in another. Say an insurance company uses the clinical recommendations of some algorithm built off the Translator’s graph to evaluate its coverage of medical procedures. This gives them license to lower their bottom line under cover of some seemingly objective but fundamentally unaccountable algorithm. There is no need for speculation: Cigna already does this [111]. Could a collection of anti-abortion clinics giving one star to abortion in every case meaningfully influence whether abortion is prescribed or covered? Why not? Who moderates the graph?

The centralized structure of the Translator’s Knowledge Providers and query engines make a small group of experts responsible for curating the entire structure of biomedical information. The curation process could be “crowdsourced” to allow affected communities to suggest improvements, but the platformatized nature of the Translator both concentrates decisionmaking power and diffuses responsibility across a string of platform holders. Who is supposed to fix incorrect or harmful query responses? Is it the responsibility of the potentially dozens of Knowledge Providers, the swarm of reasoning agents, or the frontend wrapper you pay a monthly subscription for? It is the platformatized nature of the Translator itself that creates the need for centralized moderation in the first place. The design of the Translator to evolve into a series of “user-“ or customer-facing platforms that aspire to universality binds it to all the regulatory burden any biomedical technology bears. The cost of moderation will of course be enormous, placing a fundamental constraint on its lifespan as a publicly funded project — and a strong incentive towards co-option by the information conglomerates capable of paying it33.

These problems hint at the likely fate of the Translator project. Rather than integrating into the daily practice of researchers, the centralized process of creating Knowledge Providers can only be maintained for as long as the grant funding for the Translator project lasts. When queried at the time of writing, of the 25 knowledge providers that were responsive to information about “Anything that is related to the common cold,” 22 were unresponsive or timed out.

How the Translator is intended to work by its architects is almost irrelevant compared to the question of what happens to it after the project ends. Linking biomedical and patient data in a single platform is a natural route towards a multisided market where records management apps are sold to patients, treatment recommendation systems are sold to clinicians, research tools and advertising opportunities are sold to pharmaceutical companies, risk metrics are sold to insurance companies, and so on. The contours of this market are already clear.

As a non-exhaustive set of examples:

- I have already described RELX’s interest in personal biomedical data. Their 2022 Annual Report [39] is the first year where they explicitly describe their entrance into the patient data market34. RELX is a particularly worrying example because of their established roles among academics, governmental entities, medical systems, and insurance providers.

- Amazon already has a broad home surveillance portfolio [112], and has been aggressively expanding into health technology [113] and even literally providing health care [114, 115], which could be particularly dangerous with the uploading of all scientific and medical data onto AWS with entirely unenforceable promises of data privacy through NIH’s STRIDES program [116].

- Google already includes medical conditions in its surveillance-backed advertising profiles [117, 118], and is edging its way into wearable health data with eg. its acquisition of FitBit [119]. It also already has a system, Med-PALM, for biomedical question answering based on large language models [120, 121, 122]. Search is a primary entrypoint for many people seeking health information, and Google presumably would be more than happy to merge that data with a generalized biomedical knowledge graph.

- Apple already has a matured Health ecosystem of apps and services for both patients, clinicians, and researchers [123, 124] and has a similar exposure to relevant data and control of platforms (iOS, watchOS) to make use of it, though they have marketed themselves in the surveillance space as a defender of privacy.

- Of course Microsoft [125] and IBM [126] are also in play.

The design of the Translator project reflects the prevailing logic of the surveillance economy as powered by knowledge graphs, and is poised to be swallowed up by it. Rather than a means for us to collectively make sense together, it imagines a cloud-driven system where a small group of experts wave a wand of unknowable algorithms over a bulging plastic trash bag of data to pull out the Magic Knowledge Rabbit. The noble intention of making a generalized biomedical knowledge graph for the public good is unlikely to be realized. In the process, though, the NIH will have funded facilitating technologies and standards for the merger of personal electronic health records with the broader landscape of biomedical data. Academics will have new vectors by which they become unwitting or unwilling collaborators35 with surveillance and data brokers, lending what credibility they have left to a landscape of buggy black boxes of biopolitical control. And, most importantly, vulnerable populations will have dozens of new ways to be marginalized by the techno-political medical establishment.

NSF: Open Knowledge Network

While the NIH builds a set of universal knowledge graphs for biomedical information, the NSF is building them for everything else. Its Open Knowledge Network (OKN) project intends to “provide an essential public-data infrastructure for enabling an AI-driven future.” [127] Compared to the Translator, the OKN pulls punches for neither its utopian promises nor obvious risks. Some sections of its roadmap are written in the breathless tenor of Big Data solutionism, claiming that “harnessing the vast amounts of data generated in every sphere of life and transforming them into useful, actionable information and knowledge is crucial to the efficient functioning of a modern society” [127]. Without mincing words, the OKN intends to make a Universal Knowledge Graph of Everything. The recipe is familiar: a) make authoritative schemas for everything, b) link them all together, c) ingest data from as many sources as possible at whatever quality available, d) integrate private with public data e) put it all in the cloud! (p. 18-19 “Creating an OKN” [128]).

The project was initially proposed in 2017, went through two cohorts of projects within the NSF Convergence Accelerator in 2019 and 202036, and invited a broader submission of proposals in November 2021 [129]. The roadmap comes at the end of a series of workshops in 2022 intended to scope and outline the OKN so there is still very little public evidence of its progress to evaluate37, but along with the Translator, what is available tells the story of an emerging consensus for public data infrastructures.

Its domain is much broader than the Translator, and is unmistakably bound up in both the United States Federal Government’s military and political interests in Artificial Intelligence38 [130] and the information economy’s interests in making a universal space where all information can be bought and sold with minimal friction39 [128]. Where the Translator has the near-inevitable risk of being captured by information conglomerates, through the euphemism of “public private partnership” the OKN makes clear it intends capture by for-profit entities as part of its design: for example, the team behind the SPOKE biomedical knowledge network immediately spun off a for-profit startup to sell the graph as a cloud service [131], abandoning further UX development of its publicly accessible demo.

They OKN describes its work along “vertical” and “horizontal” dimensions, where “vertical” applications refer to specific uses or domains like energy or health data, and “horizontal” themes like technologies and governance are shared across all domains. The collection of “vertical” topics identified in the 2022 roadmap hint at the effectively unbounded scope of the OKN: accelerated capitalism via supply chain logistics, more tightly integrated weapons development, a handful of climate change projects, an omniscient financial system, and so on. Each imagines the primary problem in a given domain not as structural exploitation or injustice, but a lack of data40.

The “vertical” topical working groups in the 2022 roadmap centered on an algorithmic justice system are particularly illustrative: An Integrated Justice Platform group describes the need for greater surveillance across every contact people have with the US Justice System in a wish list of data sources that should be integrated - arrest and booking, jail, trial, prosecution, and the rest. A Decarceration group41 describes extending that surveillance through to the rest of incarcerated people’s lives after they are released - rehab, parole, foster care, shelters, public services, etc. A Homelessness group intends to track unhoused people in order to match them to available resources. A Decision Support for Government42 group describes bundling up these and other data sources into platforms for making “data driven decisions” on topics including crime and policing.

On their own, each of these groups describes noble goals: decreasing bias in the justice system, providing resources to formerly incarcerated or unhoused people, making government decisions more efficient. Taken together, however, the projects describe a panoptical surveillance system that wouldn’t even need to be reconfigured to be used for algorithmically-enhanced oppression. I doubt any of the researchers in these groups intend for their work to be used for state violence, but Palantir doesn’t care what academics intended their tools to be used for43.

The motivations behind integrating government data sources and automating public benefit delivery cannot overcome the context of systemic oppression they are embedded within. Group H, the “Homelessness OKN” group, takes particular effort44 to focus on the needs of the unhoused and address the potential risks of “track[ing] homelessness in real time, [and] identify[ing] available homelessness programs and services,” but misses the already-real harms of similar prior efforts. Virginia Eubanks describes how Los Angeles County’s Coordinated Entry System — a program very much like that described by group H, intended to match unhoused people with housing supply by integrating previously siloed data systems — operates as a sophisticated mechanism of control and punishment:

For Gary Boatwright and tens of thousands of others who have not been matched with any services, coordinated entry seems to collect increasingly sensitive, intrusive data to track their movements and behavior, but doesn’t offer anything in return. […] Moreover, the pattern of increased data collection, sharing, and surveillance reinforces the criminalization of the unhoused, if only because so many of the basic conditions of being homeless are also officially crimes. […] The tickets turn into warrants, and then law enforcement has further reason to search the databases to find “fugitives.” Thus, data collection, storage, and sharing in homeless service programs are often starting points in a process that criminalizes the poor. […]

Further integrating programs aimed at providing economic security and those focused on crime control threatens to turn routine survival strategies of those living in extreme poverty into crimes. The constant data collection from a vast array of high-tech tools wielded by homeless services, business improvement districts, and law enforcement create what Skid Row residents perceive as a net of constraint that influences their every decision. Daily, they feel encouraged to self-deport or self-imprison. Those living outdoors in encampments feel pressured to constantly be on the move. Those housed in SROs or permanent supportive housing feel equally intense pressure to stay inside and out of the public eye. […] Coordinated entry is not just a system for managing information or matching demand to supply. It is a surveillance system for sorting and criminalizing the poor. [132]

It is impossible to consider integrated data in government without confronting the reality of algorithmic policing. Under its Strategic Plan goal of “Realiz[ing] Tomorrow’s Government Today” Los Angeles County has already been integrating its information systems, including creating a unified system of law enforcement and other public service data “to identify super utilizers of justice and health system resources”45 [133, 134]. Many police departments — including the LAPD — already have access to the kind of linked data ecosystems described by the OKN by renting them from private data brokers like Palantir [135, 136]. These data infrastructures facilitate the well-described feedback loop of predictive policing, where areas already subject to historical economic and racist violence are classified as “high-crime areas,” more police are concentrated there, in turn causing them to measure or create more crime46 [135, 137, 138, 139, 140, 141, 142]. The reformist idea that more data will help us “police the police” is belied by the resolute history of more data allowing the police to innovate on information asymmetries to create new expressions of power [143, 144].

The critical difference between prior infrastructures and those imagined by the OKN is that they are explicitly designed to be linked into a continuous network of data that enables the same kind of data-driven decisionmaking that drives predictive policing for any system. We should not be imagining the utterly mechanistic bureaucracy of Kafka here, but rather the deeply expressive and personal exercise of power of Terry Gilliam’s Brazil. Widespread algorithmic governance doesn’t necessarily look like a faceless bureaucracy where all decisions are made by a computer, existing algorithmic systems like predictive policing and the working conditions at Amazon warehouses retain the very human domain of discretion (see [143]). The algorithms and seemingly open infrastructures of these two projects purport themselves as objective and egalitarian, but who they are built for, who gets to provides the inputs, and who decides which outputs matter make their reality very different.

A report from Wired and Lighthouse Reports that gained unprecedented access to an algorithmic social service system created by Accenture for the city of Rotterdam shows how the discretion of caseworkers and a purportedly “objective” algorithm together create a profoundly discriminatory system [145, 146]. Caseworkers make subjective determinations like an applicant showing signs of low self-esteem or whether they can “deal with pressure” and feed them along with characteristics like age and gender into an opaque set of decision trees to determine whether they should be investigated for benefits fraud. The opacity of the system makes it rich with opportunities for discretionary bias that, again, can be both intentional and unintentional. For example, the mere presence of a comment on motivation or attitude increases ones likelihood of being flagged for investigation, even if that comment is positive. Intentional and unintentional welfare fraud are undifferentiated in the training data, making language barriers — a source of accidental fraud from not understanding the system — a primary determinant of investigation. In the case of the OKN, merging data from many governmental systems under the aegis of algorithmic fairness could do precisely the opposite: expanding the points of discretionary control where opaque decisions in input data or application of an algorithm can have long range impacts on governmental outcomes.

While it is still too early to evaluate the OKN as a project, it along with the Translator show the outlines of public information infrastructures to come.

The two major public research funding agencies in the US have both devised novel funding mechanisms to be able to bypass typical review and include private industry in their data infrastructure projects [147, 148]. These data infrastructures consist of a number of sub-projects for building new domain-specific and universalizing Semantic Web ontologies and cloud-based platforms for data storage and retrieval. Both are both explicitly oriented towards exposing structured data to “AI” and other derivative “big data” applications, rather than towards integrating in the daily work of researchers or the public at large. The potential for harm from big data solutionism, corporate capture, and discretionary abuse is common to both projects. These and other47 efforts like NIH’s STRIDES initiative point towards a cloud-driven SaaS/PaaS future for public data infrastructure [149].

The Translator and OKN and their sub-projects have many possible fates: their grant funding could peter out and they could amount to very little beyond the scattered prototypes and spinoff startups that they’ve currently produced — a mere wasted opportunity. They could flourish and become exactly what their creators intend them to be - the seamless data infrastructures of the future that manage to miraculously avoid all potential harms.

More important than the outcomes of these projects in particular is how the ruts of collective imagination drive both projects towards very similar designs with very similar flaws. It is not the technologies in themselves that are pathological, but the way they are imagined as part of a larger socio-political system: who is intended to use them, to have power within them, to own them? These projects presuppose an enlightened technocrat class as the principle agent of social good and configure technologies accordingly. The grand unified graph of everything will allow the truth to emerge from the Big Data so that decisionmakers can divine what is best for the commoners who could not possibly understand the complexities of their health, environment, or social systems themselves. This belief finds fertile ground among academics who intend to do good but have little incentive to critically evaluate the surrounding political-economic systems that might structure the form that good might take48.

Maybe paradoxically, the aspirations of universality strongly constrain their ambition and use. By punting the more foundational questions of creating storage and compute infrastructure to the cloud, there is no place for “raw” data since it is too unwieldy to affordably host or handle. By recapitulating the focus of the early semantic web on universalizing ontologies rather than tooling for arbitrary expression, the projects hem themselves in to only what its creators can imagine either in the ontologies themselves or the ways their expansion are governed. By needing to present themselves as singularly “true” and reliable, they are less able to represent ambiguity and uncertainty — which are ultimately “truer” representations of most kinds of Knowledge. By adopting the patterns of the industries that enclose us within similarly limited platforms, they are doomed to re-entrench rather than liberate us from the engineered helplessness that makes it hard to fluidly express and make use of information in the first place.

These design logics and the technologies they produce must be understood against the backdrop of the history and present structure of the platformatized cloud-driven information economy writ large. Facing the limits of proprietary ontologies in private knowledge graphs, the information industry wants a set of cross-domain “top level” ontologies to enable the smooth interchange of public information that can then be integrated with “lower-level” private ontologies for an even greater array of surveillance-backed knowledge-as-a-service platforms. Under the guiding star of openness as an end in itself, researchers and funding agencies seem keen to provide it, and in partnership with private industry have adopted the logic of their platforms.

Infrastructural Ideologies

The Cloud is not a neutral, inevitable, or optimal form of the web — it has been actively constructed to facilitate a particular set of power and property relationships that make up the web’s dominant business model. It is supported by a system of values and beliefs that are consciously affirmed to various degrees in a positive feedback loop with the expertise and resource investment that make its enabling technologies more developed and obvious than alternatives, in turn fueling the truth of those beliefs, including that of the inevitability of the cloud model itself.

The history of the web is an odd substance: always present and eternal, yet profoundly ephemeral and immediately forgotten. It becomes increasingly difficult to imagine obscure roads not taken in the deeper architecture of the internet49 with every fork. Before the dominance of compute in the cloud, distributed computing projects like folding@home were more powerful than any supercomputer50 [150]. Before the dominance of cloud video streaming platforms, peer-to-peer systems accounted for a majority of global internet traffic: in the mid-2000’s between 49% and 95%, depending on the survey [151, 152].

The Cloud paradigm is at once phenomenally successful and riddled with obviously undesirable qualities. Cloud services promise large volumes of hassle-free storage — but also make our data take a round trip across the planet if we want to transfer it between computers in the same room. Cloud systems are impressive feats of engineering, capable of serving immense quantities of data from relay CDNs dotted around the globe — but only need to do so because of the preposterous inefficiency of needing to re-serve data like streaming video in full each time they are accessed. Cloud systems can be made to have very high uptime, but when they do go down their dramatic centralization causes massive internet-wide blackouts even for systems that only depend on them indirectly [153, 154]. Delivering cloud platforms through the browser requires less setup than local software, but the complexity of the underlying web standards make it effectively impossible [155] to escape the near-monopoly51 of Chrome52, and make many services completely unavailable if the internet goes out or even slows down.

That these trade-offs are either not considered or seen as the natural constraints of internet technologies is precisely the evidence of The Cloud as ideology. By treating The Cloud as a system of belief we can better understand how its acolytes imagine the world they are creating — and what they have in store to get us there. In particular, it is only possible to understand the meaning and intention of the surge of chatbots like chatGPT, Microsoft’s integration into Bing, and Google’s Bard as the logical conclusion of both the Cloud Orthodoxy and the history of Knowledge Graphs as a universal acid in data infrastructures. Finally, reopening the avenues foreclosed by its structuring beliefs, we will propose an alternative in Vulgar Linked Data.

The Cloud Orthodoxy

Ideology evades any singular definition, and I’m not obnoxious enough to claim I have a Complete and True Perspective53 on something as multifarious as the belief system underlying The Cloud as an infrastructural pattern.

To set the Terms and Conditions of this section: this definition is a necessary strawman to make sense of patterns of outcomes and pose as contrast to our alternative. I describe the Cloud Orthodoxy as a belief system because none of its components are unique or necessary for any one person to believe, but they are mutually reinforcing and self-compatible. It is one of many ideologies active in this cluttered space, including immortality cults like longtermism and good old fashioned neoliberalism. Many of these beliefs are not “bad” in themselves — assuming that the adherents of an ideology don’t believe they are “bad” people is a foundational part of trying to understand them. By describing it as a positive vision, I am omitting the brutal reality of surveillance, control, and profit extraction that it generates. These ideas of course draw on a mountain of prior thought54, and I admit my relative inexperience, welcome critique and contextualization, and will certainly need to completely rewrite them in future work.

My argument here is that the people and companies involved with these technologies don’t have an “ethical deficit” that might call for “more ethics in AI,” but that The Cloud poses its own strong ethical doctrine.

The Terms and Conditions having been settled…

A cardinal value of Cloud Orthodoxy is convenience. The internet should be fast, reliable, and everything55 should be available on demand. Convenience is elevated at the exclusion of other values when in conflict like shared power or flexibility. Complexity is a cognitive nuisance for people with otherwise busy full lives, so it should be hidden as much as possible. Interface design is a major point of competition between platforms because it is a primary method of obscuring complexity.

The world is asymmetrical and hierarchical. I am a consumer, a user and I trade my power to a developer or platform owner in exchange for convenience. The purpose of the internet is for platform holders to provide services to users. As a user I have a right to speak with the manager, but do not have a right to decide which services are provided or how. As a platform owner I have a right to demand whatever the users will give me in exchange for my services. Services are rented or given away freely56 rather than sold because to the user the product is convenience rather than software. Powerlessness is a feature: users don’t need to learn anything, and platform owners can freely experiment on users to optimize their experience without their knowledge. Information is asymmetrical in multiple ways: platforms collect and hold more information than the users can have and parcel it back out as services. But also, platform holders are the only ones who know how to create their services, and so they are responsible for the convenience prescribed for a platform but not the convenience of users understanding how to make the platform themselves.

The Platform has agency. Computational “agents” or microservices are dispatched by the platform, not by you. The Platform provides a fixed set of features with a fixed set of affordances. The Platform harnesses Users57 and creates possibilities — without the Platform they have nothing, The Platform provides everything. Users make Content for the Platform either explicitly or implicitly eg. via crowdsourced labor like training spam filters, reporting bots, reinforcing network effects by usage, and so on, which increases its value for other users. Users are fundamentally interchangeable and isolated from one another. The existence of sociality or community is a service provided by the Platform. The Platform Personalizes: Users are interchangeable but not homogeneous, and The Platform uses their Content to create a private reality for each User. Users are unreliable — they lie, cheat, and subvert the game established by the Platform, so only the Platform can ensure safety and reliability.

Information is a commodity. The commodity form of Information is Data. Information is a natural resource to be mined. Information is something that users consume. Ambiguity is a bug - information is true or false, and there is a single True way of describing the world regardless of context or positionality58. Data that does not conform to the correct schema is unclean. The highest goal of all data is to be machine readable. Provenance is a matter of estimating degree of certainty about Truth, not situating information in its context. More data is better59. Uncertainty is a deviation from some underlying True value and can be fixed by having more or higher quality data [156]. Where users make content, the Platform reveals insights from a large enough dataset by applying the right algorithmic computation or reasoning agent — the platform refines data into Knowledge60. The Platform knows better than individual, atomized users because it has more data than them, and so the Platform should collect as much of their data as possible to provide them the best service. It is impossible or inconvenient for users to make use of all the world’s data, so the role of the Platform is to provide Knowledge as a service by algorithmically sorting feeds, providing summaries, and so on. Privacy is at the discretion of the Platform, since data is needed to make derivative services that ultimately benefit the user. If the user doesn’t like this arrangement, they are free to not use the Platform. The benefit of the platform doesn’t necessarily need to be for the particular user who is providing data or content — The Platform matches different kinds of users like advertisers to customers, law enforcement agencies to suspects, etc. in order to maximize the overall value of all Platforms.

The Near Future of Surveillance Capitalism: Knowledge Graphs Get Chatbots.

Given that positive caricature of the Cloud Orthodoxy, what is the future it imagines, and why is the addition of chatbots to knowledge graphs of central importance?

The construction of search — particularly single-bar search a la Google — as the primary means of information retrieval on the web is not epiphenomenal to its history or structure. The problem that search addresses is an overload of information: if there were only 5 websites, search would be unnecessary. Before Google, search engines were littered with categories and rich with “advanced search” parameters common in other, more constrained search contexts to specify coordinates in the overload. The single bar search paradigm61 is simply more convenient than rifling through categories or preparing structured queries. Its convenience, of course, naturally trades off with the amount of information present in a query, and thus the ability to specify precisely what you’re after.

Imprecision in search, when calibrated correctly, is a feature not a bug62. The cognitive expectation of indexical or “advanced” search in a finite database is that it is possible to “reach the bottom” of it — given my query, if something was here I would be able to find it. Conversely, it would be very obvious if a result that didn’t match your query was included in the results. It is by, perhaps counter-intuitively, cultivating the expectation of imprecision that it becomes possible to embed ads or other sponsored content in results63. It’s a delicate dance: if you are presented with exactly the correct link at the top of a page of results, you don’t spend enough time in the feed to be advertised to. If the results are too low quality, searchers might look elsewhere.